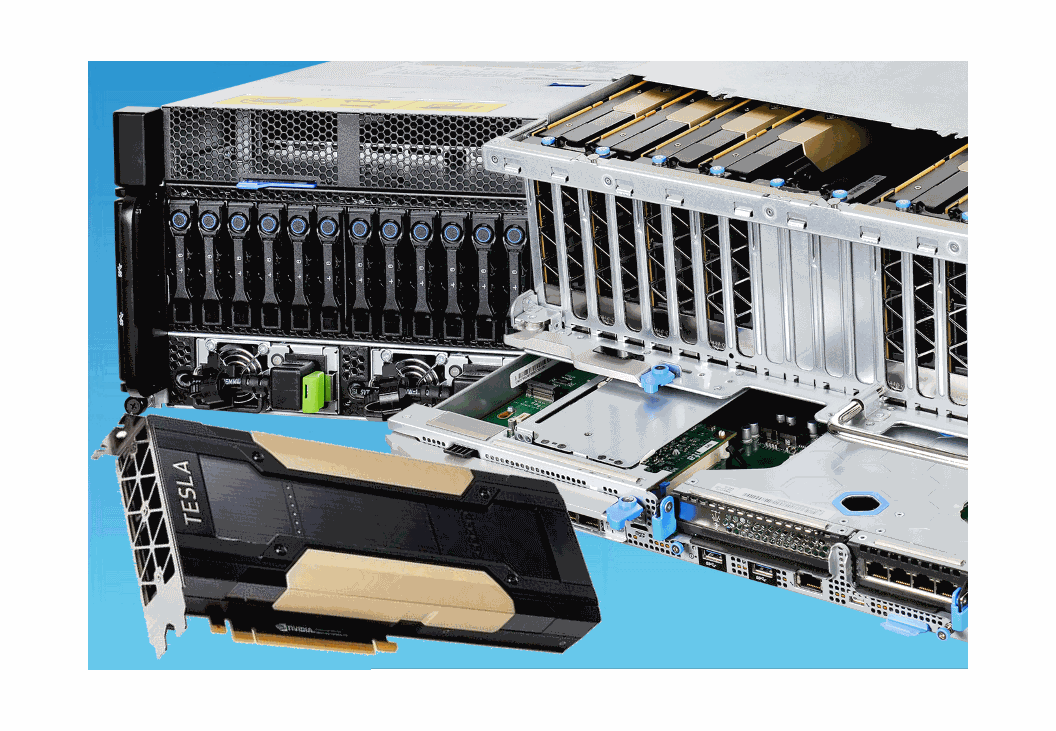

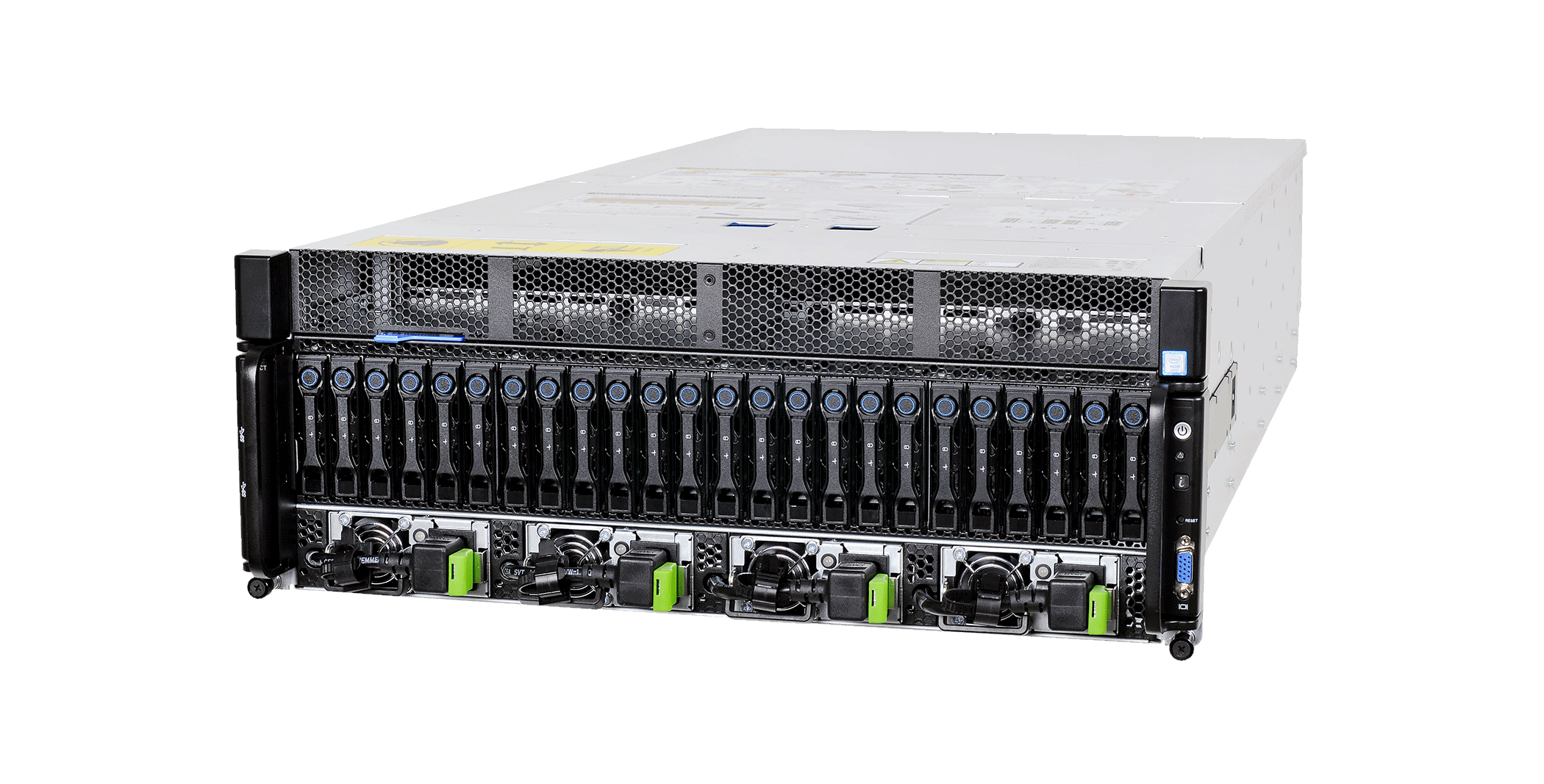

Mfalme S5G

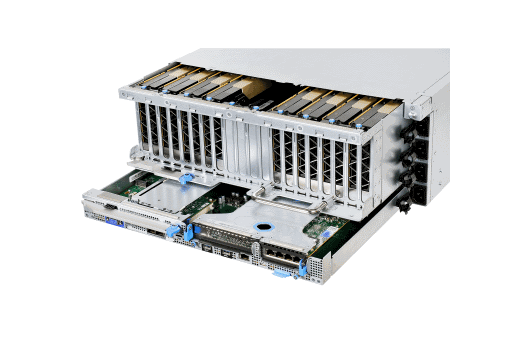

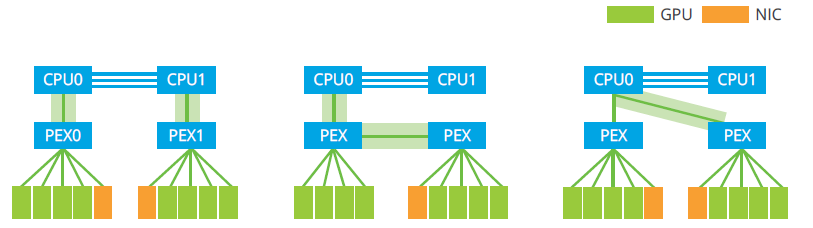

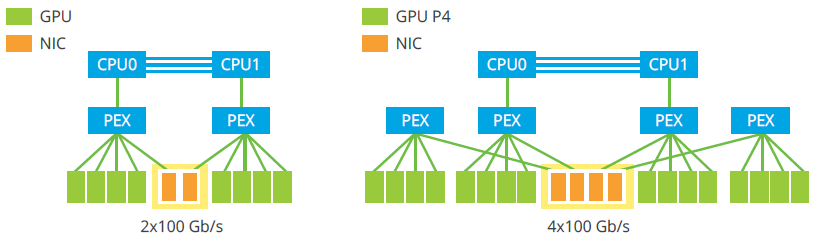

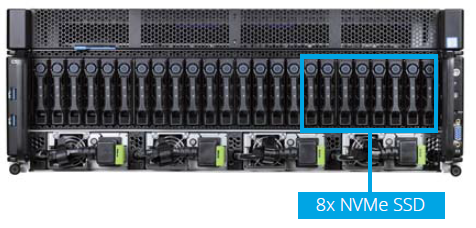

An All-in-One Box That Prevails over AI and HPC Challenges

- NVIDIA® NGC Ready Server
- Up to 8x NVIDIA® Tesla® V100 GPUs with NVLink™, supporting up to 300 GB/s GPU-to-GPU communication
- Up to 10x dual-width 300 W GPUs or 16x single-width 75 W GPUs
- Diverse GPU Topologies to Conquer Any Type of Parallel Computing Workload
- Up to 4x 100 Gb/s High-Bandwidth RDMA-Enabled Networking to Scale Out Efficiently
- 8x NVMe Storage to Accelerate Deep Learning