Ndege QuantaMicro X11C-8N

Description

World’s Densest Micro Server

Introducing the world's densest Micro Server, EVER. The QuantaMicro fits up to eight (8) independent compute or GPU nodes for parallel processing into 2RU.

Each of the up to eight (8) independent sleds can be deployed either as a compute node with a single Intel Xeon E-2200 CPU and four (4) DDR4 DIMM slots, or as a GPU node with an NVIDIA T4, a universal deep learning accelerator ideal for distributed computing environments.

Storage and Networking Options

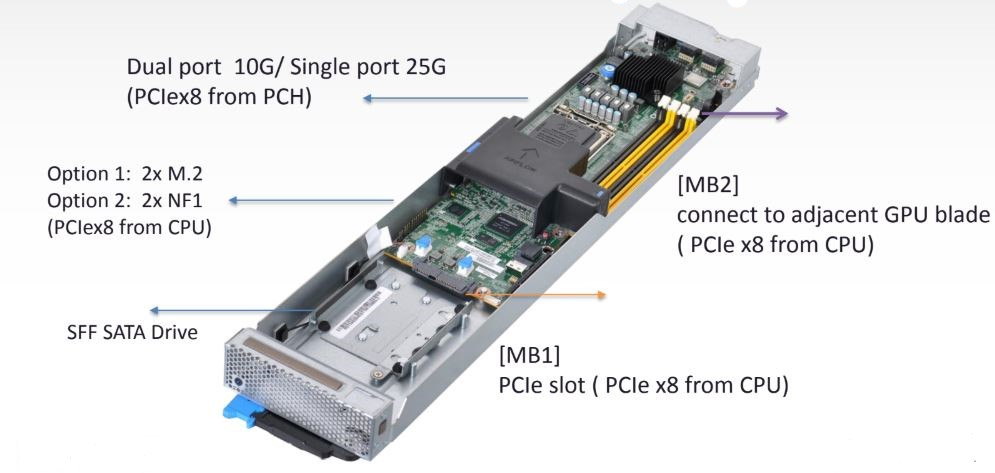

For storage, each sled has a 2.5″ SFF SATA drive bay and room for two PCIe M.2 storage devices.

Each sled accepts either two M.2 drives or two NF1 drives. Networking is provided by either dual 10GbE ports or a single 25GbE uplink.

In the dual 10GbE configuration, the aggregated networking means that only four (4) QSFP+ 40GbE uplinks are needed for eight (8) servers instead of sixteen (16) SFP+ uplinks.
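The 16-to-4 reduction follows from lane arithmetic: eight nodes with two 10GbE ports each need 16 individual SFP+ cables, while a QSFP+ 40GbE port carries four 10 Gb/s lanes. The sketch below is a simplified model that assumes each 10GbE port maps onto exactly one 40GbE lane with no oversubscription; the text does not describe how the chassis actually performs the aggregation, and the function names are hypothetical.

```python
QSFP_PLUS_LANES = 4  # a QSFP+ 40GbE port carries four 10 Gb/s lanes
LANE_GBPS = 10


def sfp_plus_uplinks(nodes: int, ports_per_node: int) -> int:
    """One SFP+ cable per 10GbE port when every node is cabled individually."""
    return nodes * ports_per_node


def qsfp_plus_uplinks(nodes: int, ports_per_node: int) -> int:
    """QSFP+ uplinks needed, assuming one 10GbE port per 10 Gb/s lane (hypothetical model)."""
    lanes = nodes * ports_per_node
    return -(-lanes // QSFP_PLUS_LANES)  # ceiling division: a partly used port still counts


nodes, ports = 8, 2  # eight sleds, dual 10GbE each
sfp = sfp_plus_uplinks(nodes, ports)
qsfp = qsfp_plus_uplinks(nodes, ports)
print(sfp, "SFP+ cables ->", qsfp, "QSFP+ cables")
print("aggregate:", sfp * LANE_GBPS, "Gb/s vs", qsfp * QSFP_PLUS_LANES * LANE_GBPS, "Gb/s")
```

Under this model the aggregate bandwidth is unchanged (160 Gb/s either way); only the cable count drops.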

Another seven (7) cables are eliminated because the nodes no longer need individual out-of-band IPMI management cables.

Aside from the obvious density gain of fitting eight (8) nodes into 2U of space, the QuantaMicro solution also cuts the number of required cables from 40 to 7, making maintenance significantly easier.
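The text itemizes only part of the 40-to-7 figure: network uplinks drop from 16 to 4, and seven IPMI cables are removed. The tally below assumes one IPMI cable per node beforehand (so one remains afterward) and treats whatever is left over as cables the text does not itemize; it is a bookkeeping sketch of the stated numbers, not a documented cabling plan, and `CableTally` and `tally` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CableTally:
    network: int
    ipmi: int
    other: int  # cables the text does not itemize

    @property
    def total(self) -> int:
        return self.network + self.ipmi + self.other


def tally(total: int, network: int, ipmi: int) -> CableTally:
    """Split a stated cable total into itemized counts plus an unexplained remainder."""
    other = total - network - ipmi
    if other < 0:
        raise ValueError("itemized cables exceed the stated total")
    return CableTally(network, ipmi, other)


NODES = 8
# Before: 16 SFP+ uplinks and (assumed) one IPMI cable per node, 40 cables in total.
before = tally(total=40, network=16, ipmi=NODES)
# After: 4 QSFP+ uplinks; removing 7 IPMI cables leaves (assumed) one shared cable.
after = tally(total=7, network=4, ipmi=NODES - 7)

print(f"network: {before.network} -> {after.network}")
print(f"IPMI:    {before.ipmi} -> {after.ipmi}")
print(f"other:   {before.other} -> {after.other} (not itemized in the text)")
print(f"total:   {before.total} -> {after.total}")
```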

GPU Compute Options

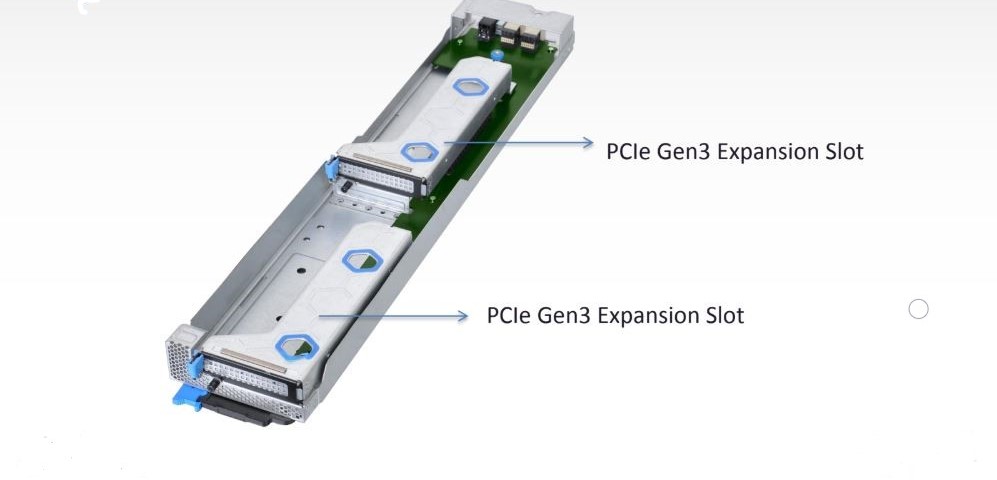

The X11C-8N also offers a GPU sled option. This sled has two expansion slots for low-profile PCIe GPUs, such as the NVIDIA Tesla T4.

NVIDIA T4 is a universal deep learning accelerator ideal for distributed computing environments. Powered by NVIDIA Turing™ Tensor Cores, T4 provides revolutionary multi-precision performance to accelerate deep learning and machine learning training and inference, video transcoding, and virtual desktops. As part of the NVIDIA AI Platform, T4 supports all AI frameworks and network types, delivering dramatic performance and efficiency that maximize the utility of at-scale deployments.

Here is a quick look at the network topology with the dual 10GbE solution.

The 25GbE solution uses only a single uplink per node to a pass-through board.